Abstract. In this paper, we address the critic optimization problem within the context of reinforcement learning. The focus of this problem is on improving an agent's critic, so as to increase performance over a distribution of tasks. We use ordered derivatives, in a process similar to back propagation through time, to compute the gradient of an agent's fitness with respect to its reward function. With each iteration, the gradient is computed based upon a trajectory of experience, and the reward function is updated. We evaluate this method on three domains: a simple three-room gridworld, the hunger-thirst domain, and the boxes domain. Starting from a random reward function, our gradient ascent critic optimization is able to find high-performing reward functions which are competitive with ones that are hand-crafted and those found through exhaustive search. We conclude that our sample-based gradient ascent method for critic optimization is a fast and highly effective means of learning reward functions for use with reinforcement learning agents.

This paper introduces simultaneous generalized hill-climbing (SGHC) algorithms as a framework for simultaneously addressing a set of related discrete optimization problems using heuristics. Many well-known heuristics can be embedded within the SGHC algorithm framework, including simulated annealing, pure local search, and threshold accepting (among others). SGHC algorithms probabilistically move between a set of related discrete optimization problems during their execution according to a problem probability mass function. When an SGHC algorithm moves between discrete optimization problems, information gained while optimizing the current problem is used to set the initial solution in the subsequent problem. The information used is determined by the practitioner for the particular set of problems under study. However, effective strategies are often apparent based on the problem description. SGHC algorithms are motivated by a discrete manufacturing process design optimization problem (that is used throughout the paper to illustrate the concepts needed to implement a SGHC algorithm). This paper discusses effective strategies for three examples of sets of related discrete optimization problems (a set of traveling salesman problems, a set of permutation flow shop problems, and a set of MAX 3-satisfiability problems). Computational results using the SGHC algorithm for randomly generated problems for two of these examples are presented. For comparison purposes, the associated generalized hill-climbing (GHC) algorithms are applied to the individual discrete optimization problems in the sets. These computational results suggest that near-optimal solutions can be reached more effectively and efficiently using SGHC algorithms.

This works in all modern versions of OS X, from OS X Lion to Mountain Lion, Mavericks, OS X Yosemite, you name it, it’s supported post-Lion.


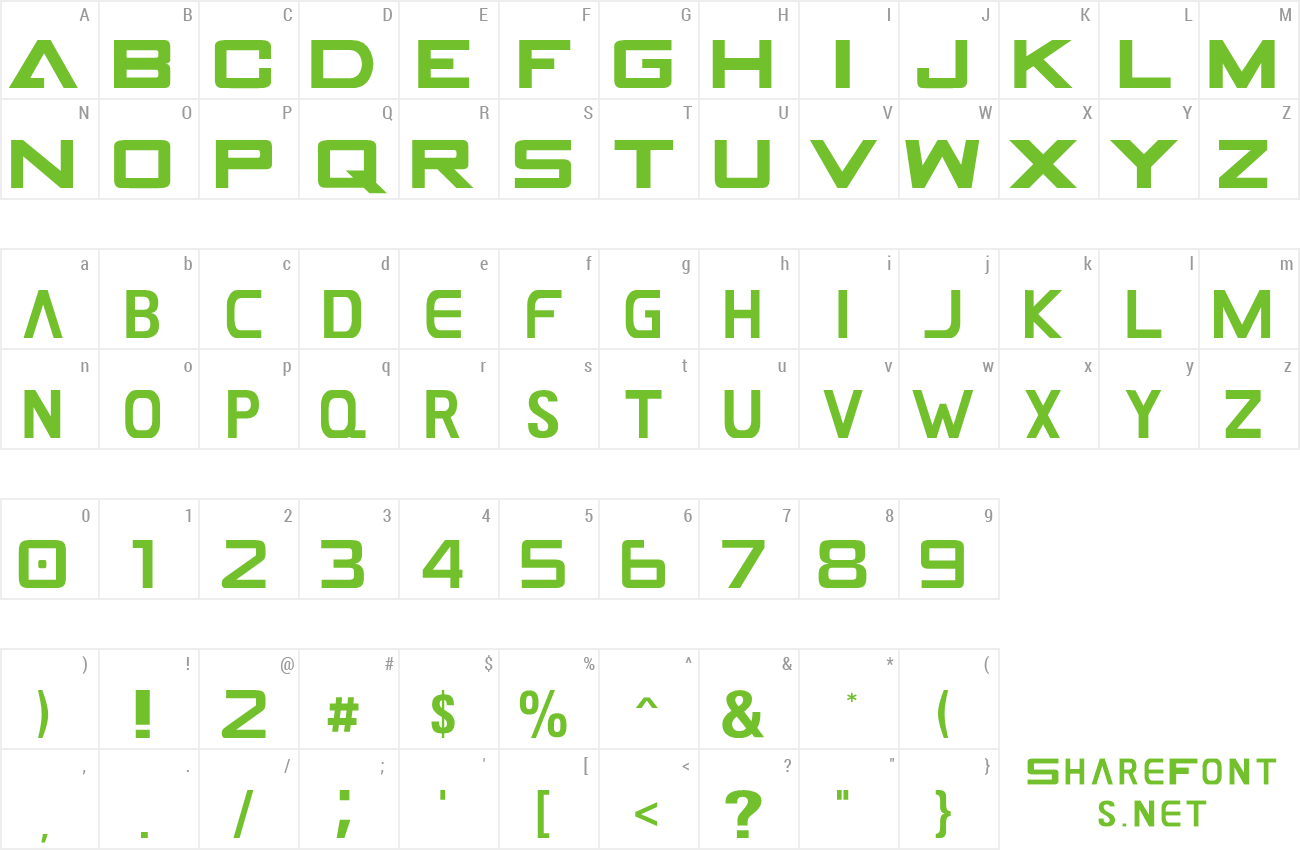

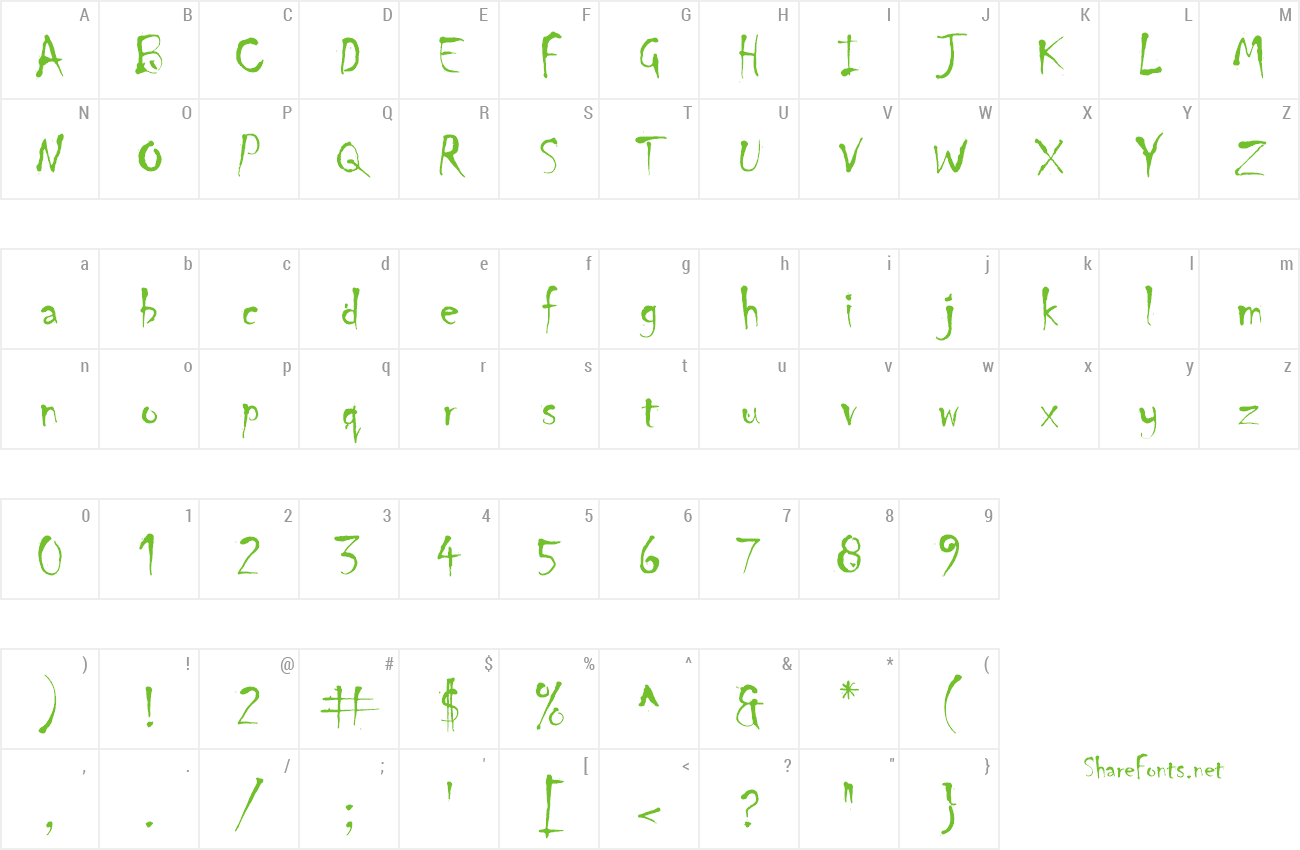

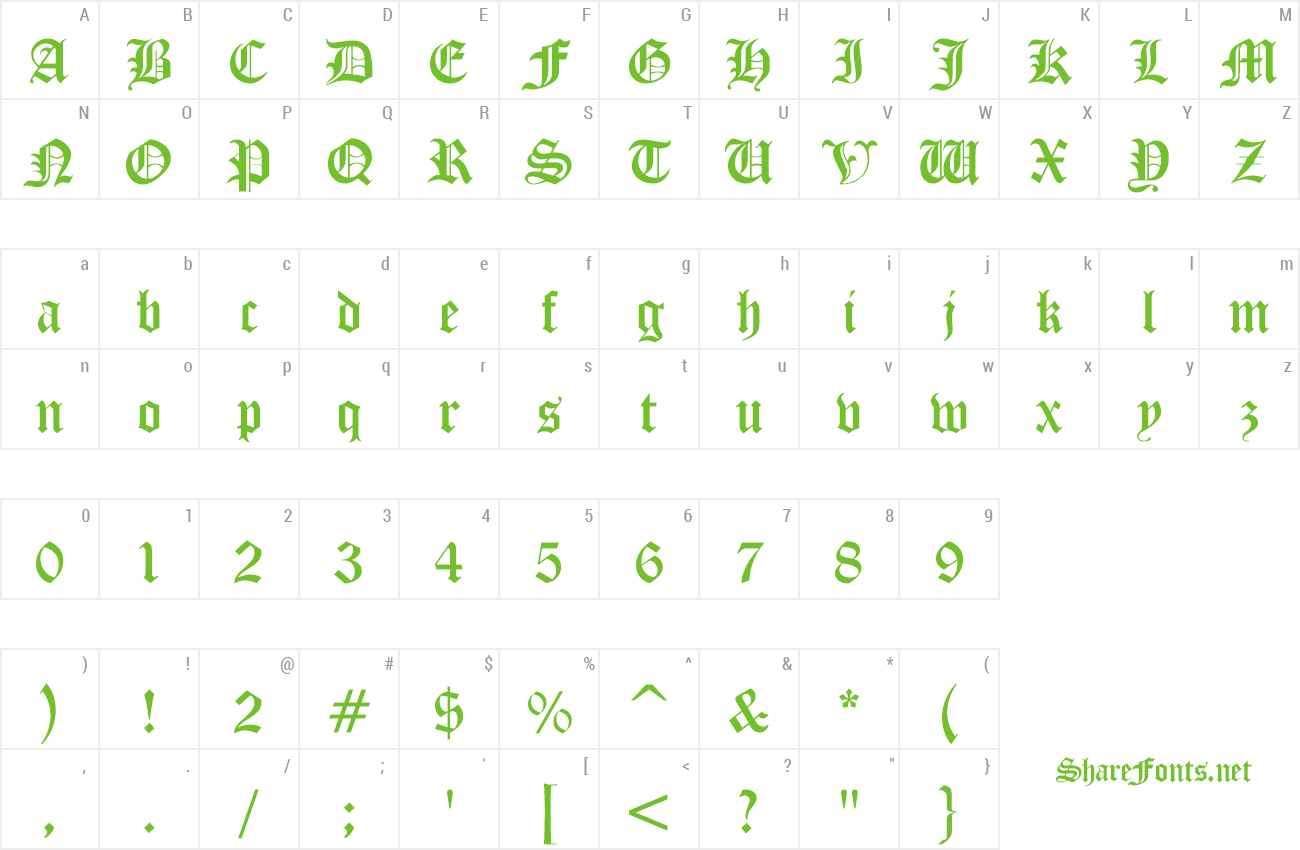

While only marginally useful, this does allow for an additional degree of user experience customization, which is always a plus in our book. I’m a big fan of Menlo Regular 11 and 12, but the world of ugly fonts is now open to you, including Dingbats and Emoji characters if you really want to get stupid.


While you’re in a Terminal theme’s settings, you can change the background picture of Terminal windows too, which is a nice effect.

You can pick any font you want to become the new default in Terminal, or you can assign the font change to specific profiles. You’ll obviously want to use something that is readable:

- Open “Preferences” from the Terminal app menu.
- Choose Settings, then select a theme and go to the Text tab.
- Choose “Font” and make the change to the terminal font as desired.

As long as you are actively using the theme you are adjusting, the changes take effect immediately in a live fashion.

Perhaps more helpful than changing the font is the ability to adjust font and line spacing.
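If you'd rather script the font change than click through Preferences, something along these lines may work. This is only a rough sketch: it assumes Terminal's AppleScript dictionary exposes `font name` and `font size` on a `settings set` (a profile), and the helper names `build_font_script` and `apply_font`, the profile name "Basic", and the font string "Menlo-Regular" are illustrative choices, not anything from the steps above.

```python
import platform
import subprocess


def build_font_script(profile: str, font_name: str, font_size: int) -> str:
    """Return AppleScript text that sets the font of one Terminal profile."""

    def quote(text: str) -> str:
        # Escape backslashes and double quotes for an AppleScript string literal.
        return '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'

    target = f"settings set {quote(profile)}"
    return "\n".join([
        'tell application "Terminal"',
        f"    set font name of {target} to {quote(font_name)}",
        f"    set font size of {target} to {int(font_size)}",
        "end tell",
    ])


def apply_font(profile: str, font_name: str, font_size: int) -> bool:
    """Run the script through osascript; return False when not on macOS."""
    if platform.system() != "Darwin":
        return False
    script = build_font_script(profile, font_name, font_size)
    # osascript accepts several -e options, one per script line.
    args = ["osascript"]
    for line in script.splitlines():
        args += ["-e", line]
    subprocess.run(args, check=True)
    return True


if __name__ == "__main__":
    # Print the script for inspection instead of changing any settings.
    print(build_font_script("Basic", "Menlo-Regular", 12))
```

Printing the script first lets you check it before calling `apply_font`. The exact font name Terminal accepts may differ from what the Font panel shows, so treat that string as something to verify on your own machine.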


How to change the Terminal font in Mac OS X
